Relational Part-Aware Learning for Complex Composite Object Detection in High-Resolution Remote Sensing Images

Click here to get full paper: File:Relational Part-Aware Learning for Complex Composite Object Detection in High-Resolution Remote Sensing Images.pdf

Abstract

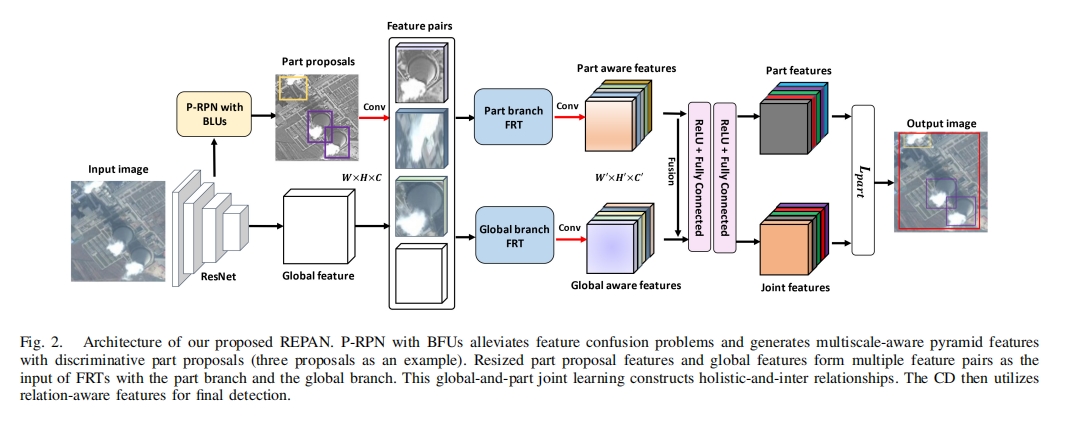

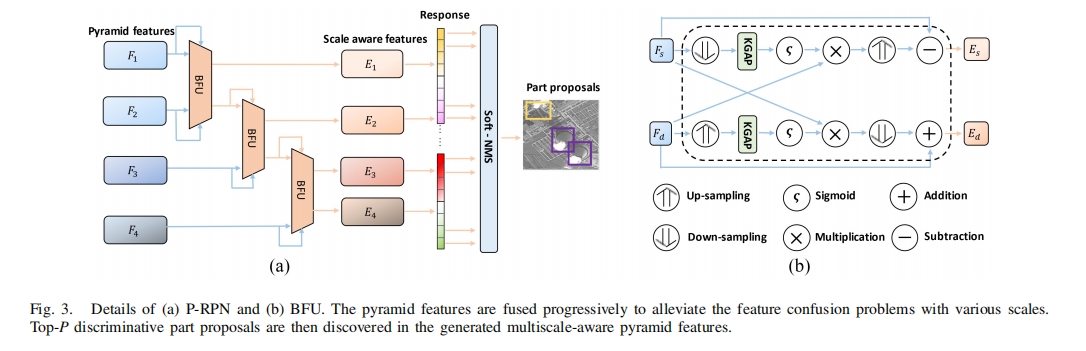

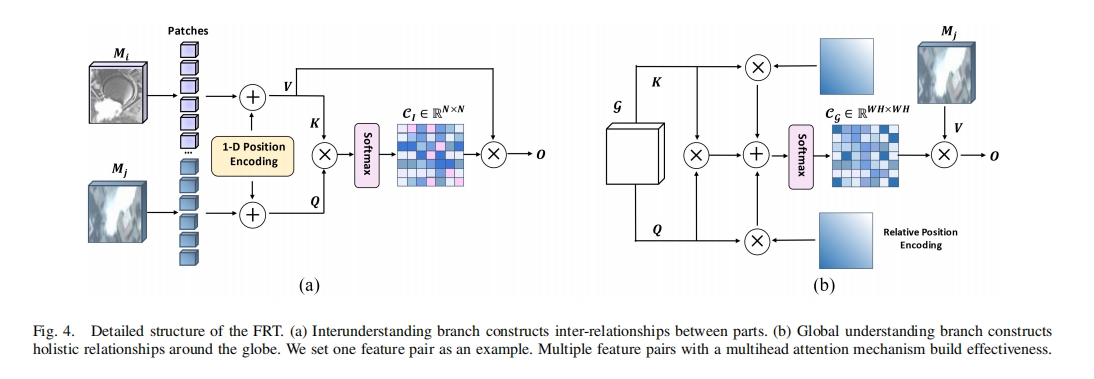

In high-resolution remote sensing images (RSIs), complex composite object detection (e.g., coal-fired power plant detection and harbor detection) is challenging because the target is not a clearly defined single object: it consists of multiple discrete parts with variable layouts, which leads to complex yet weak inter-relationships and blurred boundaries. To address this issue, this article proposes an end-to-end framework, the relational part-aware network (REPAN), to explore the semantic correlation and extract discriminative features among multiple parts. Specifically, we first design a part region proposal network (P-RPN) to locate discriminative yet subtle regions. With butterfly units (BFUs) embedded, feature-scale confusion problems stemming from aliasing effects can be largely alleviated. Second, a feature relation Transformer (FRT) models the spatial relationships in depth through part-and-global joint learning, exploring correlations between various parts to enhance the representation of significant parts. Finally, a contextual detector (CD) classifies and detects the parts and the whole composite object using multirelation-aware features, where part information guides the localization of the whole object. We collect three remote sensing object detection datasets with four categories to evaluate our method. Extensive experiments show that the proposed method consistently surpasses state-of-the-art methods, underscoring its effectiveness and superiority.

Introduction

Object detection in remote sensing, one of the most interesting yet formidable problems, lays the groundwork for interpreting and understanding remote sensing images (RSIs). Owing to advances in high-resolution RSI datasets and deep learning algorithms, tremendous progress has been made in the accuracy and efficiency of object detection in remote sensing. However, most existing algorithms are designed for clearly defined single-object detection, such as vehicle detection, and overlook the many complex composite objects in optical RSIs (e.g., coal-fired power plants and airports) that should be treated as a whole. These complex composite objects provide essential support for society (e.g., power plants for electricity generation and airports for transportation), so monitoring them in RSIs is equally important. Identifying these composite complexes, with their multiple parts and nonrigid layouts, is a challenging research problem, made harder by complicated backgrounds and blurred boundaries.

Compared with single-object detection, complex composite object detection in RSIs is difficult for two reasons; Fig. 1 compares complex composite objects with single objects. First, these objects are characterized by intricate parts with various layouts. For example, a coal-fired power plant contains chimneys and condensing towers, and such a complex detection target involves complex spatial relationships between parts and nonrigid boundaries. Nonrigid boundaries can enlarge the bounding boxes and decrease precision. The composite nature of these objects means that the parts are discrete, and other textures between the parts weaken and disturb the composite spatial relationships, making it difficult to detect a composite object precisely as a whole. Second, complex composite objects are frequently situated amid surroundings with similar textures, further complicating detection. For instance, coal-fired power plants are often located in industrial areas where similar industrial infrastructure may hamper coal-fired power plant detection performance. Similar surroundings contribute to blurred boundaries and confuse bounding box localization. Unlike single objects, such as cars or ships, which have a unified structure and a unified semantic meaning without significant internal complexity, composite objects, such as a coal-fired power plant, have a more complex structure that combines multiple semantic meanings. As indicated by the red lines and green ovals in Fig. 1(a) and (b), complex yet weak spatial inter-relationships and blurred boundaries caused by multiple components with various layouts make composite objects harder to detect than single objects.
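The effect of an enlarged bounding box on localization quality can be illustrated with intersection over union (IoU), the standard overlap measure in object detection. The following is a minimal sketch: the box coordinates are invented for illustration and do not come from the paper, and the helper name `iou` is hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Illustrative values only (assumed, not taken from the paper).
ground_truth = (100, 100, 300, 300)  # annotated extent of a composite object
tight_box = (105, 105, 295, 295)     # prediction that follows the true extent
loose_box = (50, 50, 350, 350)       # prediction inflated by an unclear boundary

print(f"tight: {iou(ground_truth, tight_box):.4f}")  # 0.9025
print(f"loose: {iou(ground_truth, loose_box):.4f}")  # 0.4444
```

In this toy case the inflated box fully contains the object, yet its IoU drops below the commonly used 0.5 matching threshold, so it would be counted as a miss under that criterion.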

Nevertheless, commonly used CNN-based object detection methods rely on feature extraction from local regions and use these features to generate bounding boxes. For composite object detection, these algorithms may fail to handle the semantic gap between low-level features and high-level understanding of objects caused by complex and diverse spatial inter-relationships between parts. Additionally, the highly variable appearance of parts makes it difficult to generalize across different instances of the same object. Consequently, the direct application of existing algorithms to composite object detection is ill-advised, and part-based methods are better for discovering discriminative and subtle components.

Part-based methods are used in fine-grained visual classification tasks, aiming to generate rich feature representations or localize parts for feature enhancement. By modeling a complex structure as an assemblage of distinct parts that can be localized and recognized individually, part-based methods offer heightened efficacy for composite object detection. Recently, a few efforts have been made on part-based methods for composite object detection in RSIs. For example, Sun et al. proposed a unified part-based CNN network consisting of a part localization module and a context refinement module to localize the most representative part features. Although previous work has achieved promising results, its focus on constrained local feature learning and its simple concatenation of part features neglect discriminative parts and the potential of long-range spatial inter-relationships. We argue that investigating the potential correlation between parts and constructing a global semantic understanding of objects can significantly benefit composite object detection in RSIs.
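To make the contrast with simple concatenation concrete, the sketch below applies plain scaled dot-product self-attention to one global feature vector and a few part feature vectors, so that every token is refined by a weighted mix of all tokens. This is only a generic illustration of relating parts to each other and to the whole: it is not the FRT described in the paper, it uses no learned projections, and the function name `joint_attention` and all feature values are assumptions made for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def joint_attention(global_feat, part_feats):
    """Self-attention over [global] + parts tokens (identity projections, assumed).

    Returns the refined tokens and the attention weights, one row per token.
    """
    tokens = [global_feat] + part_feats
    scale = math.sqrt(len(global_feat))
    refined, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, including itself.
        row = softmax([dot(query, key) / scale for key in tokens])
        # Each refined token is a weighted mix of all tokens.
        mixed = [sum(w * t[i] for w, t in zip(row, tokens))
                 for i in range(len(query))]
        refined.append(mixed)
        weights.append(row)
    return refined, weights


# Toy features (hypothetical): one global descriptor and two part descriptors.
global_feat = [1.0, 0.0, 0.5, 0.0]
part_feats = [[0.9, 0.1, 0.4, 0.0], [0.0, 1.0, 0.0, 0.8]]

refined, weights = joint_attention(global_feat, part_feats)
for row in weights:
    print([round(w, 3) for w in row])
```

Unlike concatenation, which places part features side by side without relating them, each output token here depends on every other token, which is the kind of long-range interaction the paragraph above argues for.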

Conclusion

In this article, we focus on complex composite object detection in RSIs and propose REPAN. We are the first to point out that composite object detection is based on part feature representation and spatial relationships. For part feature representation, we design a P-RPN to discover discriminative parts robustly and precisely by alleviating feature confusion. For spatial relationships, we propose, for the first time, an FRT that builds holistic and intersemantic correlations through global-and-part joint learning. The potential correlation between the global object and its parts addresses the problems of complex, weak spatial relationships and disturbance from similar textures. With the relation-aware features generated by the Transformers, the CD performs the final detection with promising performance. Evaluations on our collected dataset with four categories and comprehensive ablation studies demonstrate the superiority of the proposed REPAN. In the future, we will continue to explore the applicability of REPAN at large scale.