Main Page

Welcome Message

Hello! This is the homepage of the High Performance Geo-Computing (HPGC) research group at Tsinghua University. Here we collect all kinds of information that may interest you and the members of the group :).

The name describes the major focus of the group, which is to motivate the interaction between HPC and geoscience. The goal is to bridge the gap between the grand challenges in earth science, and the emerging computing methods and hardware platforms. Now, we are also focusing on the collaborative application of HPC and AI.

On the one hand, better supercomputing technologies lead to better solutions or even scientific breakthroughs; on the other hand, scientific advances guide the development of future supercomputing technologies. Moreover, the interaction between the two, I believe, will lead to a successful synergy of methods, data sets, perspectives, and ideas.

We currently have 1 professor, 1 postdoc, 5 PhD students and 1 undergraduate student.

We are Hiring!!!

We are constantly looking for students and postdocs.

In particular, we hope to recruit master's or doctoral students in computer science and technology, earth systems science, and other related majors at Tsinghua Shenzhen International Graduate School.

Postdocs would work in our recently started Tsinghua University - Xi'an Institute of Surveying and Mapping Joint Research Center for New Smart Surveying and Mapping, or at the National Supercomputing Center in Shenzhen.

Postdocs and students in the group would receive the standard benefits provided by Tsinghua University, as well as a competitive additional package from the group.

Contact: haohuan@tsinghua.edu.cn. Please send your CV, research statement, and 3 major publications.

Recent Research Highlights

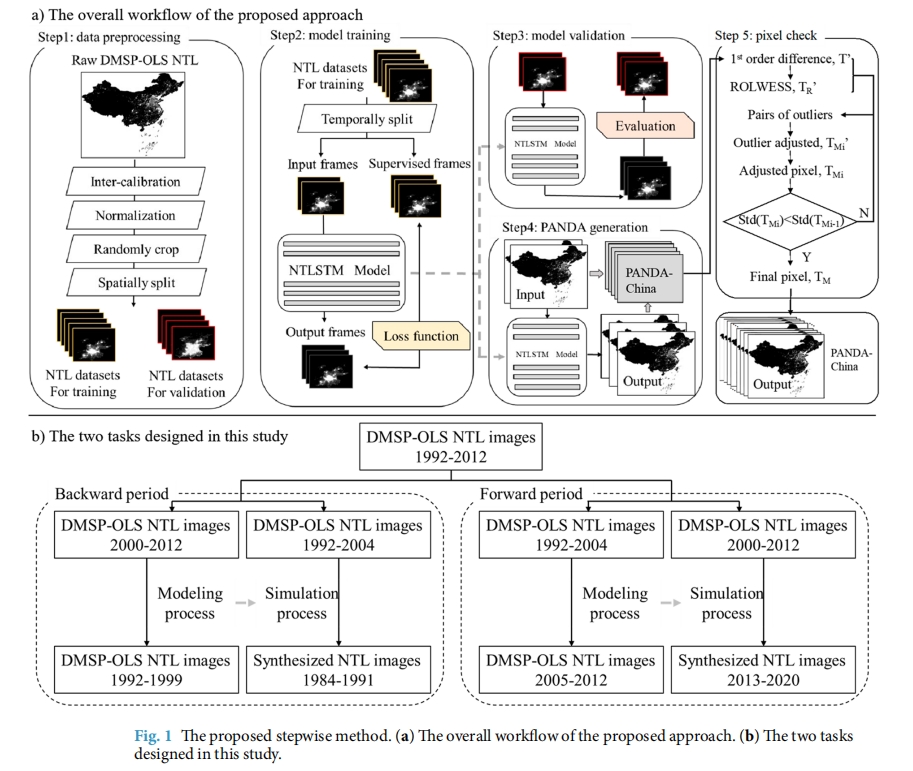

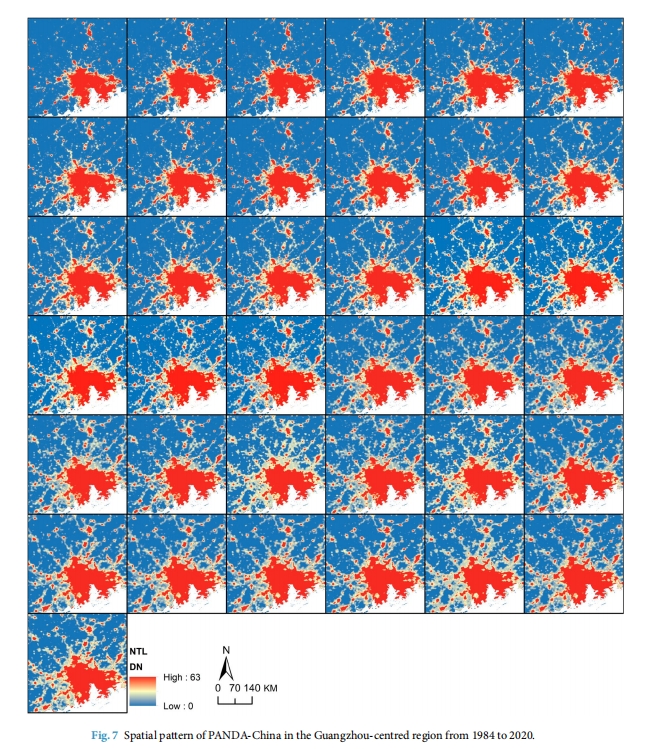

1. A prolonged artificial nighttime-light dataset of China (1984-2020)

Nighttime light (NTL) remote sensing has become an increasingly important proxy for human activities. Despite an urgent need for long-term products and pilot explorations in synthesizing them, publicly available long-term products are limited. A Night-Time Light convolutional LSTM network is proposed and applied to produce a 1-km annual Prolonged Artificial Nighttime-light DAtaset of China (PANDA-China) from 1984 to 2020. Assessments between modeled and original images show that, on average, the RMSE reaches 0.73, the coefficient of determination (R²) reaches 0.95, and the linear slope is 0.99 at the pixel level, indicating high confidence in the quality of the generated data products. Quantitative and visual comparisons demonstrate PANDA-China's superiority over other NTL datasets in its significantly longer NTL dynamics, higher temporal consistency, and better correlations with the socioeconomic indicators (built-up areas, gross domestic product, population) that are most relevant in different development phases. The PANDA-China product provides an unprecedented opportunity to trace nighttime light dynamics over the past four decades.
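The three pixel-level agreement measures quoted above (RMSE, R², and linear slope) can be illustrated with a minimal sketch. This is not the evaluation code behind PANDA-China: the function name `pixel_agreement` is hypothetical, the images are assumed to be flattened into equal-length lists of pixel values, and R² is assumed here to be the squared Pearson correlation of a least-squares fit of modeled against original values.

```python
import math


def pixel_agreement(modeled, original):
    """Return (rmse, r2, slope) for two equal-length sequences of pixel values.

    Assumptions (not taken from the source): slope comes from an ordinary
    least-squares fit of modeled on original, and r2 is the squared Pearson
    correlation between the two sequences.
    """
    if len(modeled) != len(original) or len(original) < 2:
        raise ValueError("inputs must have the same length (at least 2 pixels)")

    n = len(original)
    mean_x = sum(original) / n
    mean_y = sum(modeled) / n

    # Sums of squares and cross-products around the means.
    sxx = sum((x - mean_x) ** 2 for x in original)
    syy = sum((y - mean_y) ** 2 for y in modeled)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(original, modeled))
    if sxx == 0 or syy == 0:
        raise ValueError("inputs must not be constant")

    # Root-mean-square error between modeled and original pixel values.
    rmse = math.sqrt(sum((y - x) ** 2 for x, y in zip(original, modeled)) / n)
    slope = sxy / sxx
    r2 = (sxy * sxy) / (sxx * syy)
    return rmse, r2, slope


if __name__ == "__main__":
    # Toy values only, not real nighttime-light data.
    original = [0.0, 1.0, 2.0, 3.0]
    modeled = [0.0, 2.0, 4.0, 6.0]
    print(pixel_agreement(modeled, original))
```

A slope close to 1 together with a low RMSE suggests the modeled values track the originals without systematic scaling, while R² alone can stay high even when the values are off by a constant factor, as the toy example shows.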

2. DeepLight: Reconstructing High-Resolution Observations of Nighttime Light With Multi-Modal Remote Sensing Data

Nighttime light (NTL) remote sensing observation serves as a unique proxy for quantitatively assessing progress toward meeting a series of Sustainable Development Goals (SDGs), such as poverty estimation, urban sustainable development, and carbon emission. However, existing NTL observations often suffer from pervasive degradation and inconsistency, limiting their utility for computing the indicators defined by the SDGs.

In this research, we present a novel task of reconstructing high-resolution nighttime light images with multi-modality data. To support this research endeavor, we introduce DeepLightMD, a comprehensive dataset comprising data from five heterogeneous sensors, offering fine spatial resolution and rich spectral information at a national scale. Additionally, we present DeepLightSR, a calibration-aware method for building bridges between spatially heterogeneous modality data in the multi-modality super-resolution.

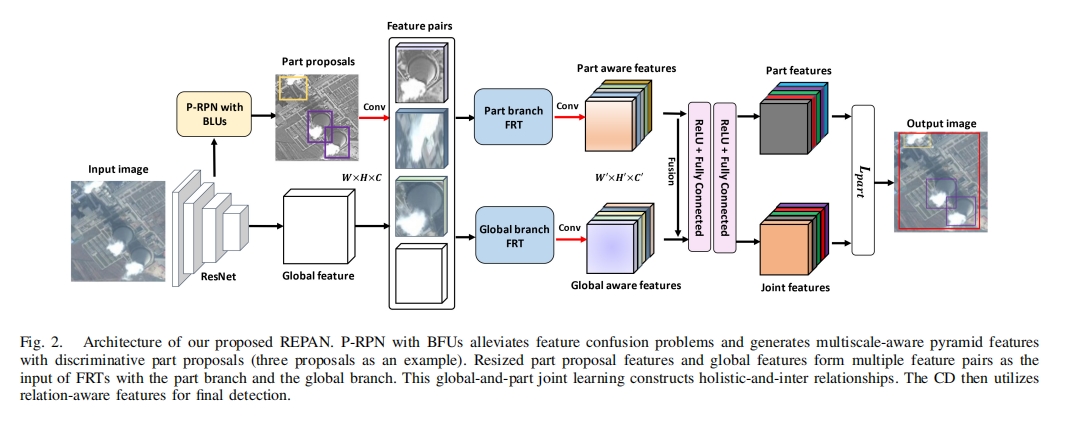

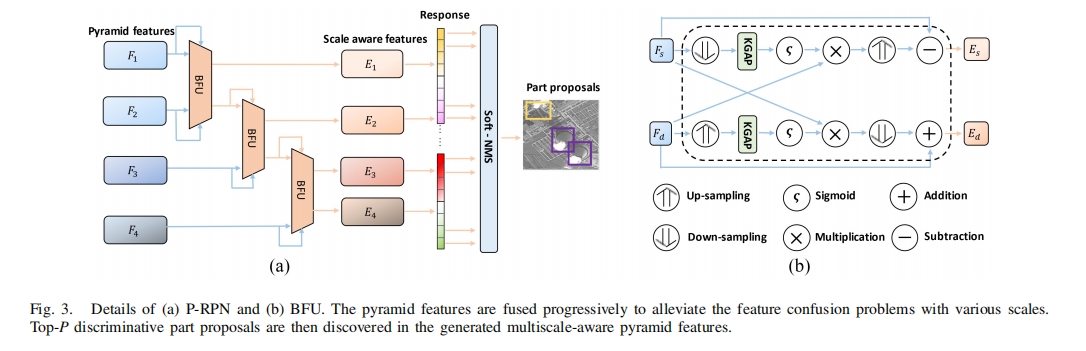

3. Relational Part-Aware Learning for Complex Composite Object Detection in High-Resolution Remote Sensing Images

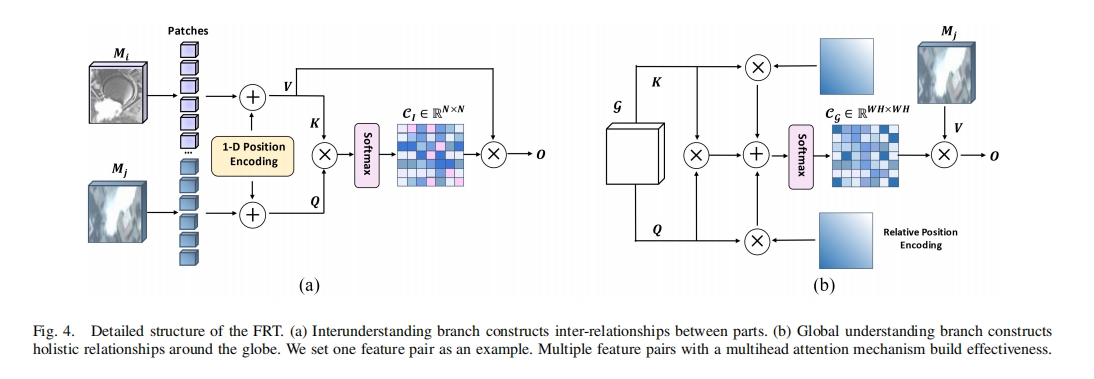

In high-resolution remote sensing images (RSIs), complex composite object detection (e.g., coal-fired power plant detection and harbor detection) is challenging because the target is not a clearly defined single object but a set of discrete parts with variable layouts, which leads to complex, weak inter-relationships and blurred boundaries. To address this issue, this article proposes an end-to-end framework, the relational part-aware network (REPAN), to explore the semantic correlation and extract discriminative features among multiple parts. Specifically, we first design a part region proposal network (P-RPN) to locate discriminative yet subtle regions. With butterfly units (BFUs) embedded, feature-scale confusion problems stemming from aliasing effects can be largely alleviated. Second, a feature relation Transformer (FRT) models the spatial relationships in depth through part-and-global joint learning, exploring correlations between the various parts to enhance the representation of significant parts. Finally, a contextual detector (CD) classifies and detects the parts and the whole composite object through multirelation-aware features, where part information guides the localization of the whole object. We collect three remote sensing object detection datasets with four categories to evaluate our method. The results of extensive experiments consistently surpass state-of-the-art methods, underscoring the effectiveness of our proposed method.

In this article, we focus on complex composite object detection in RSIs and propose REPAN. We are the first to point out that composite object detection is based on part feature representation and spatial relationships. For part feature representation, we design a P-RPN to discover discriminative parts robustly and precisely by alleviating feature confusion. For spatial relationships, we are the first to propose an FRT that builds holistic and intersemantic correlations through global-and-part joint learning. The potential correlation between the global context and the parts addresses the problems of complex, weak spatial relationships and disturbance from similar textures. With the relation-aware features generated by Transformers, the CD conducts the final detection with promising performance. Evaluations on our collected dataset with four categories and comprehensive ablation studies demonstrate the superiority of our proposed REPAN. In the future, we will continue to explore the large-scale applicability of REPAN.