Building Bridges across Spatial and Temporal Resolutions: Reference-Based Super-Resolution via Change Priors and Conditional Diffusion Model


Introduction

Spatiotemporal integrity of high-resolution remote sensing images is crucial for fine-grained urban management, long-time-series urban development studies, disaster monitoring, and other remote sensing applications. However, owing to the limitations of remote sensing technologies and high hardware costs, images with both high temporal and high spatial resolution cannot be acquired simultaneously on a large scale. To tackle this issue, reference-based super-resolution (RefSR) leverages geographically paired high-resolution reference (Ref) images and low-resolution (LR) images to combine the fine spatial content and the high revisit frequency of different sensors. Although various RefSR methods have made great progress, two major challenges remain in this scenario.

The first challenge is land cover change between Ref and LR images. Unlike the natural image domain, where Ref images are collected through image retrieval or captured from different viewpoints, Ref and LR images in remote sensing are matched to the same location using geographic information, so the land cover may have changed between the two acquisitions. Existing methods capture the land cover changes between LR and Ref images only implicitly, through adaptive learning or attention-based transformers. However, these methods still suffer from underuse or misuse of Ref information.

The second challenge is the large spatial resolution gap between remote sensing sensors (e.g., 8× to 16×). Existing RefSR methods are usually based on generative adversarial networks (GANs) and designed for a 4× scaling factor, so they can hardly reconstruct and transfer details in large-factor super-resolution. In recent years, conditional diffusion models have proven more effective than GANs in image super-resolution and reconstruction. A straightforward way to boost RefSR is to use LR and Ref images as conditions for the diffusion model. To exploit the reference information more effectively, some methods inject Ref information into the blocks of the denoising network. However, they model the relationship between LR and Ref images only implicitly during denoising, which leads to ambiguous use of Ref information and limited content fidelity.
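To make the "straightforward" baseline concrete, the snippet below is a minimal sketch of conditioning a diffusion denoiser on LR and Ref images by channel concatenation. It assumes a standard DDPM forward process, nearest-neighbour upsampling of the LR image to the HR grid, and a toy LR image obtained by subsampling; the function names (`upsample_nearest`, `q_sample`, `build_denoiser_input`) are hypothetical and not taken from the paper, and the denoising network itself is omitted.

```python
import numpy as np

def upsample_nearest(lr: np.ndarray, scale: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, H, W) array by an integer factor."""
    return lr.repeat(scale, axis=1).repeat(scale, axis=2)

def q_sample(x0: np.ndarray, alpha_bar_t: float, noise: np.ndarray) -> np.ndarray:
    """DDPM forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise

def build_denoiser_input(x_t: np.ndarray, lr: np.ndarray, ref: np.ndarray, scale: int) -> np.ndarray:
    """Concatenate the noisy HR estimate, the upsampled LR image and the Ref image along channels."""
    lr_up = upsample_nearest(lr, scale)
    if not (x_t.shape == lr_up.shape == ref.shape):
        raise ValueError("x_t, upsampled LR and Ref must share the same (C, H, W) shape")
    return np.concatenate([x_t, lr_up, ref], axis=0)

rng = np.random.default_rng(0)
scale = 8                                  # one of the large factors mentioned above
hr = rng.random((3, 64, 64))               # toy HR target
lr = hr[:, ::scale, ::scale]               # toy LR image by subsampling (assumption)
ref = rng.random((3, 64, 64))              # toy Ref image on the HR grid
x_t = q_sample(hr, alpha_bar_t=0.5, noise=rng.standard_normal(hr.shape))
inp = build_denoiser_input(x_t, lr, ref, scale)
print(inp.shape)  # (9, 64, 64)
```

In this baseline the denoiser sees Ref everywhere with equal weight, which is exactly the implicit, ambiguous use of Ref information criticised above.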

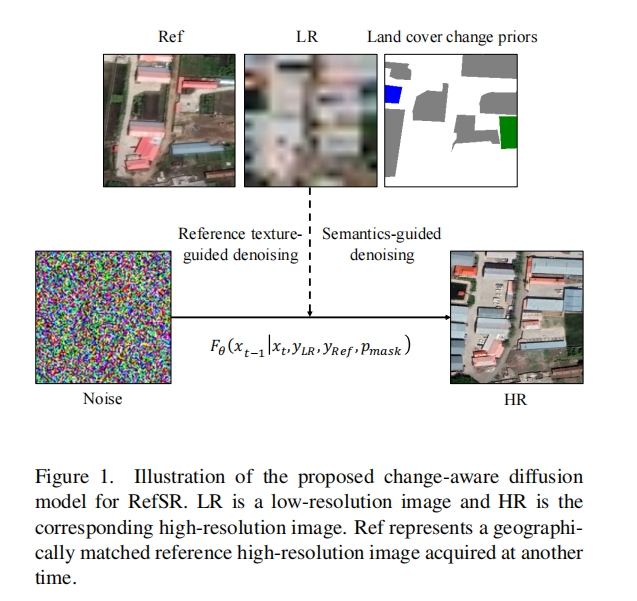

To alleviate the above issues, we introduce land cover change priors to improve the effectiveness of reference feature usage and the faithfulness of content reconstruction (as shown in Figure 1). Benefiting from the development of remote sensing change detection (CD), we can use off-the-shelf CD methods to effectively capture land cover changes between images of different spatial resolutions. On the one hand, the land cover change priors enhance the use of reference information in unchanged areas. On the other hand, the changed land cover classes can guide the reconstruction of semantically relevant content in changed areas. Furthermore, based on the land cover change priors, we decouple semantics-guided denoising and reference texture-guided denoising and perform them iteratively to improve model performance. To demonstrate the effectiveness of the proposed method, we perform experiments on two datasets with two large scaling factors, where our method achieves state-of-the-art performance. Our contributions are summarized as follows:

• We introduce land cover change priors into RefSR to improve the content fidelity of reconstruction in changed areas and the effectiveness of texture transfer in unchanged areas, building bridges across spatial and temporal resolutions in remote sensing scenarios.

• We propose a novel RefSR method named Ref-Diff that injects the land cover change priors into the conditional diffusion model through a change-aware denoising model, enhancing the model’s effectiveness in large-factor super-resolution.

• Experimental results demonstrate that the proposed method outperforms existing SOTA RefSR methods both quantitatively and qualitatively.
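This section does not specify how Ref-Diff encodes the change priors or schedules the two kinds of denoising, so the following is only a minimal sketch of the idea under stated assumptions. It assumes an off-the-shelf CD model yields a per-pixel integer map where 0 marks unchanged land cover and k in 1..K marks a change to class k. The Ref image is then kept only in unchanged pixels (texture guidance), the changed classes become a one-hot semantic map (semantic guidance), and a hypothetical per-pixel fusion selects the texture-guided noise prediction in unchanged areas and the semantics-guided one in changed areas. `split_conditions` and `fuse_noise` are illustrative names, not the paper's API.

```python
import numpy as np

def split_conditions(ref: np.ndarray, change_map: np.ndarray, num_classes: int):
    """Split conditioning by a change map (assumed encoding: 0 = unchanged, k = changed to class k).

    ref:        (C, H, W) high-resolution reference image
    change_map: (H, W) integer array with values in 0..num_classes
    Returns (ref_texture, semantic_onehot, unchanged_mask).
    """
    unchanged = (change_map == 0).astype(ref.dtype)            # 1 where land cover is unchanged
    ref_texture = ref * unchanged[None, :, :]                  # Ref texture kept only in unchanged pixels
    onehot = np.zeros((num_classes, *change_map.shape), dtype=ref.dtype)
    rows, cols = np.nonzero(change_map)                        # coordinates of changed pixels
    onehot[change_map[rows, cols] - 1, rows, cols] = 1.0       # new land cover class of each changed pixel
    return ref_texture, onehot, unchanged

def fuse_noise(eps_texture: np.ndarray, eps_semantic: np.ndarray, unchanged: np.ndarray) -> np.ndarray:
    """Hypothetical fusion: texture-guided prediction where unchanged, semantics-guided where changed."""
    m = unchanged[None, :, :]
    return m * eps_texture + (1.0 - m) * eps_semantic

ref = np.ones((3, 4, 4))
change_map = np.zeros((4, 4), dtype=int)
change_map[0, 0] = 2                                           # pixel changed to class 2
change_map[1, 2] = 1                                           # pixel changed to class 1
ref_tex, onehot, unchanged = split_conditions(ref, change_map, num_classes=3)
fused = fuse_noise(np.zeros((3, 4, 4)), np.ones((3, 4, 4)), unchanged)
```

A hard per-pixel switch is the simplest way to illustrate "decoupling"; a real model could instead feed both conditions to the network or alternate them across denoising steps.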